About 100 years ago in Internet time—that is, last month—we were all discussing POSIWID. Scott Alexander wrote a take, starting the discourse. I wrote a fire-and-brimstone reply. He replied, not as fiercely: 'basically agree'. Disappointing.

I did, however, learn a lot by reading and discussing in the comments, and ended up coming to the following realisation:

POSIWID isn’t literally true;

And that’s why it works.

Allow me to explain.

The only reason we started discussing whether POSIWID—the assertion—is true, is because POSIWID—the meme—spread. And at least part of the reason that it spread is that it isn’t true, that it simplifies too much.

Not being true makes POSIWID, as an assertion, fail. But it also makes POSIWID, as a meme, work: it spread!

In this case being literally wrong is what made it work.

In the followup to his original post Scott suggests that POSIWID—the assertion—is not fully precise and that people should use the more precise:

“If a system consistently fails at its stated purpose, but people don’t change it, consider that the stated purpose is less important than some actual, hidden purpose, at which it is succeeding”

But would you say this? Would anyone? This more precise version—precise because it doesn’t simplify—would never work: it would never spread enough to merit being discussed. And we know that it wouldn’t because it didn’t: Scott isn’t the first person to come up with a more precise formulation and, yet, “POSIWID” is what we ended up discussing, because it’s the version that ended up spreading.

In this case, the only way to achieve a memetic goal—i.e. to shift people in your cluster away from attributing good-intent when it wasn’t there—was by being literally more wrong.

So two questions got conflated. The first: is POSIWID (the assertion) technically correct, at literally all times, in a sort of a-historical way? The answer is no, it is not. The second: despite that, is it right to spread POSIWID (the meme)? And the answer to that is: it depends.

Scott had said, in his follow-up, that his steelman for the people using “POSIWID” is that they were hoping to move people from the Naive position, of overly attributing good-intent, to a Balanced position, closer to attributing it the right amount. But, he continues, “the last person to hold the Naive perspective died sometime in the 1980s, and in real life POSIWID is mostly used to push people from the Balanced to the Paranoid perspective”.

Now that is as clear a case of selection bias (the cute plane) as anything I’ve seen.

Maybe it is true of Scott’s friends/simcluster. Maybe POSIWID is the wrong thing to share there. But whether shifting your cluster away from wrong attributions of missing good-intent is the correct move (that is, whether spreading POSIWID, the meme, is the correct memetic move) is a contextual, empirical, and dynamic question that depends on what your cluster is, which way it is currently erring (i.e. towards false positives or towards false negatives?), and at what time the question is asked (as the answers to the previous two questions will change over time).

It changes over time because the direction of the error isn’t fixed. To see that we just need to go back in time 40 years to when the “inverse-POSIWID” meme was coined: Hanlon’s Razor.

Hanlon’s razor says: “Never attribute to malice that which is adequately explained by stupidity.”

We can infer from its appearance and spread that it emerged at a time when too much bad-intent was being incorrectly attributed, and that it took off because it worked as a corrective for that.

It worked so well, in fact, that we’ve since overcorrected: too much good intent started being incorrectly attributed, creating the fertile memetic ground for POSIWID to emerge as the opposite corrective.

It’s hard to get these things (estimates of ill-intent in a group) right because they’re the kind of thing that hides and thus the kind of thing you gotta infer. Society writ large will, thus, always err in one direction or another: either attributing ill-intent where there is no such thing, or attributing good intentions where they’re lacking. In erring the first way it creates memetic demand for a meme like Hanlon’s Razor to take off, as it did. In overcorrecting and starting to err in the second way it created the opportunity for POSIWID to take off and spread, as it did.

And because how much ill-intent there is also varies over time, the whole thing ends up forming a dynamic system that changes through time.

The graph assumes a fixed level of ill-intent but that’s just my lack of graph making abilities. In all likelihood it varies, and attribution trails it: when no one attributes ill-intent there’s the greatest reward/cost ratio for abusing good-will with the least likelihood of being caught. Eventually people wise up to it and information spreads in the form of memes. Society corrects, then it overcorrects, and round and round we go.

So the idea is this: as the bias in attribution historically oscillated between over-attributing and under-attributing ill intent, heuristics like Hanlon's Razor and POSIWID emerged as corrective forces to push the system back toward interpretive balance. The whole thing—quantity of ill-intent vs attribution and corrective memes—forms a feedback loop where each meme works as a context-sensitive heuristic and becomes dominant when the system drifts too far one way.

Neither meme—neither Hanlon’s Razor nor POSIWID—is a-contextually, that is, fully, technically, literally correct. Because fully, technically, literally correct things don’t become memes, because they don’t spread. Mass communication always entails mass simplification.

Or, said another way, both memes are noble lies.

Being lies, neither meme is, thus, defeasible on its own. Both are instances of what @suspendedreason called torque dynamics: memetic attempts to push discourse one way or another. Both plausibly good-intentioned. Both selected for being oversimplified, because that’s what spreads. And both selected for lending themselves to scissor statements, as that’s what spreads.

They’re both—POSIWID and anti-POSIWID (Hanlon’s razor)—best modelled as moves in a discursive game that aims to shift an equilibrium the way that the person stating them thinks is necessary. They both pose as objective statements, but that’s only because simplified statements are what spreads best and, thus, what works better at shifting discursive equilibria.

Think of a meditation master that tells one student seeking “The Right Way” to go left, and another to go right. He doesn’t intend to lie, but it’s impossible for him to do what is being asked of him: to give objective coordinates to something that can only be expressed relatively. He has to simplify his message into the kind of abstraction his students could make sense of. They each get a different answer because the first was right of the target, and the second was left.

Now, this brings up the question: what about the people who are spreading and opposing the POSIWID meme? Are they like the meditation master above? Are they all knowingly pretending to make statements of fact because they know that’s what’s best at shifting people relatively?

Was Scott deliberately doing this—being literally more wrong in the service of a higher memetic goal, as he perceives it—when he wrote his OG take? Was I? Is everyone else? Great question. And, again, an empirical one. We’d have to check one by one. I originally argued a particular side and realised what I’m writing here by discussing it since. So I did not. I wasn’t playing “at that level”. But many people are.

Ever since I realised this—that people use what seem to be factual claims to shift people relatively—I not only see it all the time, but also see people “admitting” to it all the time, in the wild (i.e. on Twitter).

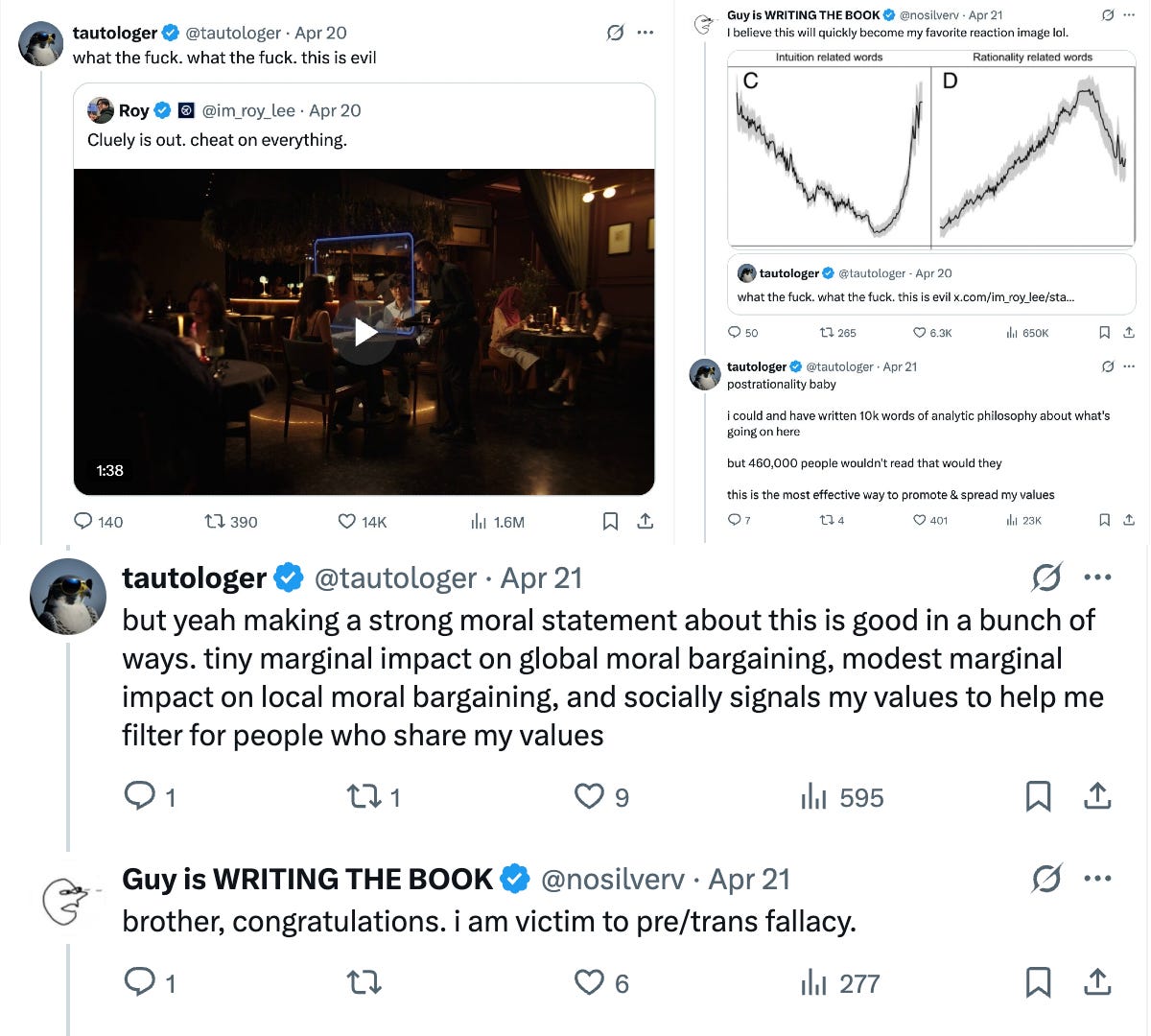

The first was @tautologer calling Cluely, a piece of software that uses AI to “cheat on everything”, “evil”. I dunked on him for not making an argument to support his claim (Ironic.). He explained that making a strong moral statement got more people to read it and was the most effective way to promote and spread his values.

Then this: “arguing on twitter is about negotiating norms”. Not being correct, not reaching the truth, not figuring out what’s true. “Negotiating norms”.

And finally, this, by @ItIsHoeMath:

I don’t [...] care if it’s true. I don’t prioritize the truth values of facts when I post, I prioritize the communication value.

These are all best modelled as conscious and deliberate interventions in the discourse. People who are playing at a “higher level”. Not playing at the 0th level of straightforwardly arguing what they think is true, but consciously trying to steer by making claims meant to directly alter what others think is true, on the margin.

And, once you see it, you can’t unsee it.

Thing bad. Thing good.

What Is the Purpose of a Guy Blogpost?

Now, I understand what I’m claiming here leaves me in a tough spot: how does my post fit in this discursive game?

To answer that, we have to go back to the dynamic system I modelled above. I simplified at the time, but it was based on predator-prey dynamics.

Basically: if you have lots of prey, predators have more food, so they increase. But too many predators eat too many prey. Prey disappear and predators start starving as a result. Their numbers drop and, with fewer predators, prey recover. The cycle starts all over.
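For the curious, the cycle just described can be sketched in a few lines of code. This is only a minimal sketch, assuming the textbook Lotka-Volterra equations; which exact model sat behind the graph is not specified here, and every parameter value below is a made-up illustration, not a measurement of anything. The function names (`simulate`, `peak_times`) are hypothetical too.

```python
def simulate(prey0=10.0, pred0=5.0, alpha=1.1, beta=0.4,
             delta=0.1, gamma=0.4, dt=0.001, steps=50_000):
    """Integrate the classic Lotka-Volterra equations with plain Euler steps.

    d(prey)/dt = alpha * prey - beta * prey * pred
    d(pred)/dt = delta * prey * pred - gamma * pred

    All default parameter values are illustrative assumptions.
    Returns a list of (time, prey, predators) tuples.
    """
    prey, pred = prey0, pred0
    history = [(0.0, prey, pred)]
    for i in range(1, steps + 1):
        # Prey grow on their own and are eaten in proportion to encounters.
        d_prey = alpha * prey - beta * prey * pred
        # Predators grow by eating prey and die off without them.
        d_pred = delta * prey * pred - gamma * pred
        prey += d_prey * dt
        pred += d_pred * dt
        history.append((i * dt, prey, pred))
    return history


def peak_times(history, column):
    """Times at which the chosen column (1 = prey, 2 = predators) hits a local maximum."""
    peaks = []
    for i in range(1, len(history) - 1):
        before = history[i - 1][column]
        here = history[i][column]
        after = history[i + 1][column]
        if before < here >= after:
            peaks.append(history[i][0])
    return peaks


if __name__ == "__main__":
    run = simulate()
    print("prey peaks at t =", [round(t, 2) for t in peak_times(run, 1)])
    print("predator peaks at t =", [round(t, 2) for t in peak_times(run, 2)])
```

Running it prints the times at which each population peaks. In this model every predator peak trails a prey peak, which is the "round and round we go" shape. A plain Euler step is the crudest possible integrator and drifts slowly over long runs, so treat this as a picture of the dynamic rather than a precise simulation.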

Above, the model was that if people are too cynical there’s ground for a corrective meme to develop. If they become too naive then that’s ground for an opposite corrective meme to develop.

We also saw how memes work (that is, spread) by posing not as the correctives that they are but, rather, as how things are; and how some meme-spreaders are consciously and deliberately taking advantage of this.

The problem with this discursive equilibrium is that it only works as long as the population of naive people (prey) who take the statements of strategic meme makers (predators) at face value is large enough. That is: what strategic meme makers are doing only works insofar as it is not generally known that that is what they’re doing. It only works in a context where most people are, or most people assume most people are, sharing their views in a “direct” way.

Now, in the former blog post I took a stance, a pro-POSIWID stance, in a way that is indistinguishable from the way I’ve claimed in this piece that strategic meme makers try to consciously steer discourse. I hope that by naming the game, to the best of my ability, I can show that I’m not a predator, but just a prey whose special interest is strategy.

But, then again, disguising themselves as prey is exactly what predators do.

Juicy juicy meta-rational memetic discourse 🤤

My take is: Even if “predator” signaling is effective for their intended ends:

1. The shifting dynamic will always eventually turn. What was once a powerful signal becomes a weakness (having undefended takes becomes a sign of untrustworthiness). But these people probably account for that and will switch to the next maximally successful strategy as needed.

2. Joe Hudson “Managing things creates a life you have to manage”: They’re creating a community in which “outcome-maxxing” is seen as valuable. Not only will they have to keep up with managing a convoluted style of discourse; they’re also treating culture as a complicated (AKA rational) system when it’s clearly a complex one (ie meta-rational, where any action will inevitably have unintended effects).

The first obvious problem I see with the strategy is simply that they’re polluting the commons with noise.

DefenderOfBasic has the best take on this imo, where in the long-term, communication strategies that are both open source and effective will win out, BECAUSE they’re effective. Strong signaling is great at achieving its stated aim, but inevitably it will run out of underlying truth “runway” and cede to strategies that are more efficient at generating/promoting/applying useful knowledge in the long term.

That said… memetics itself is also meta-rational, so there are times when strong signaling makes sense and I’m just being a little bitch for hating the player instead of the game.

¯\_(ツ)_/¯

the "go left / go right" diagram is 🎯. So, if you want to actually change people, the way to do it is by first detecting where they are, before giving them the advice. OR, just teach people to figure that out so that they filter all information through that.

The problem is right now the majority are still in this thing of, if the expert says "go left", and that clearly is going in a wrong direction, they think "well, I guess they are the expert, I should override the feedback right in front of me and listen to this guy instead". Terrible!!

I think we can supercharge this whole thing with "interactive essays". Basically ask people "what do you think X means" or how do you answer this, freeform, and an LLM can say "ok you already got it, this essay isn't for you, but you can read it anyway". Those answers also themselves act as data (you, and other readers, get to see what everyone thought going into the essay, and what their reaction was afterwards)